Misinformation on social media exacerbates political polarization

During the 2016 presidential election, operators of the pizza parlor Comet Ping Pong began receiving threats from right-wing activists who erroneously believed the shop was the center of a pedophile sex ring involving presidential candidate Hillary Clinton and other liberal political elites. On Dec. 4, 2016, a 28-year-old man from North Carolina came to the pizza parlor with a rifle to “rescue the children” after seeing numerous posts on extremist sites and social media apps. These posts, often using the hashtag “#pizzagate,” propagated the falsehoods circulating in right-wing circles about the shop. #Pizzagate typifies the real-life impacts of fake news disseminated through online media. Such misinformation can promote polarized political views and sometimes even threaten democracy. Given these dire consequences, students must make an effort to work on their media literacy skills to become responsible citizens.

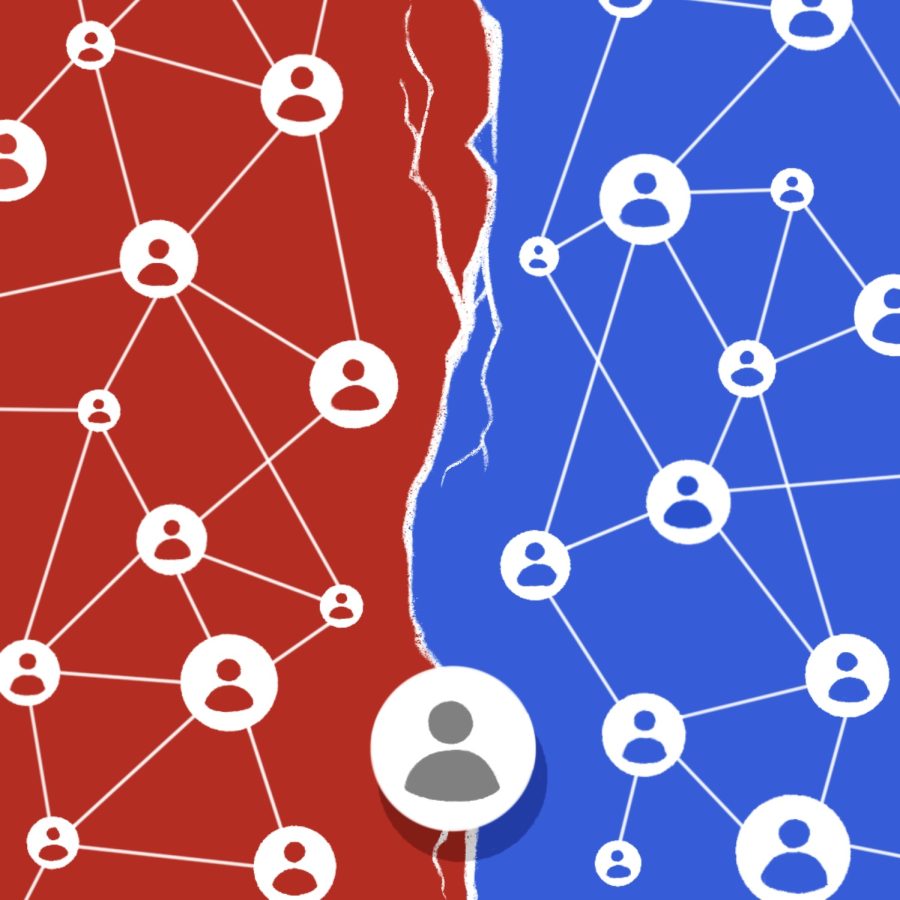

Before working to combat misinformation, it is essential to understand what political polarization truly is, as well as how social media has contributed to it. Britannica describes political polarization as “extreme and long-lasting partisanship in a two-party system that results in the division of a country’s entire population into two diametrically opposed political camps.” According to social studies teacher Laurel Howard, as this polarization intensifies, the incumbent party’s followers behave in a more autocratic manner to stay in power. On the other side, opponents become more willing to resort to undemocratic means to undermine the incumbents’ power. Consequently, those on opposite extremes of the political spectrum tend to be less likely to work with one another on bipartisan efforts. This is more relevant now than ever: A Pew Research Center analysis from 2022 found that, on average, Democrats and Republicans are ideologically farther apart today than at any time in the past 50 years. This dynamic can severely damage democracy and encourage citizens to become blindly loyal to their parties.

In the U.S., social media has made extreme points of view easy to disseminate in the digital age. This contributes to the widening gap between political parties by providing avenues for extreme groups to circulate fake news. In the case of #pizzagate, the false theory was promoted on platforms such as Reddit and Facebook. More recently, far-right groups used social media sites to help organize the insurrection at the Capitol on Jan. 6, 2021.

Social media algorithms also make it easier for the average citizen to confirm their own political beliefs, rather than explore others, thus facilitating further polarization. These algorithms personalize the content that users see based on their past behavior and interactions, using previously liked or shared posts to keep recommending similar or related content that the user might want to engage with. This can lead to a bubble (or echo-chamber) effect in which users are only exposed to content that reinforces their beliefs, contributing to intense confirmation bias. Interacting with different points of view requires strong media literacy skills so students can form their own opinions. Interacting with others who hold diverse perspectives, sentiments and experiences can extend our knowledge, shape our viewpoints and enhance our societal connections. However, if individuals opt out of these conversations, instead isolating themselves from those with different political beliefs, it only fosters division.

In a 2021 survey conducted by research foundation Reboot, 27% of respondents said social media made them “less tolerant” of people who have opposing points of view. When people fail to interact with those with opposing viewpoints, they lose an essential part of their critical thinking abilities. As future or current voters, Gunn students must learn how to be responsible and knowledgeable citizens who are able to engage with those who may have beliefs differing from their own.

A significant part of addressing this issue is learning to assess information’s credibility. There are several ways to do so, whether the information is an Instagram post discussing recent news or a retweet of an article. First, simply slow down: When skimming through an article, one may forget to be analytical and critical of the writing and might blindly accept disinformation. It is crucial to determine whether the information is echoed in multiple sources. For example, if one finds something on social media, they should look to see if there is similar content in other reputable sources, such as the Associated Press or perhaps a textbook. Additionally, people tend to get pulled in by statistics that lack context. For instance, if a news source states that 20% of people are affected by an issue, take a moment to check the context and see how many people were surveyed. Twenty percent of a small sample is different from 20% of the entire population. Put the statistic into perspective. Finally, it is important to assess word choice in the media. When reading the information, search for inflammatory words, specifically adjectives, that may imply biases and specific narratives that the source is trying to push. Word choice can shift the entire meaning of a text.

These steps may seem minuscule, yet their impacts are great. Making these efforts to improve media literacy can stop the mindless resharing of the type of disinformation that pushes our society further and further apart.

Senior Annabel Honigstein is a forum editor for The Oracle. She enjoys reading outdoors, drinking absurd amounts of coffee and traveling.

Vikram Valame • Apr 19, 2023 at 11:04 pm

This is a good point to make. It’s even more relevant as AI increases its capabilities rapidly.

One of the worst examples of Artificial Intelligence has got to be the stunning amount of comments on news media sites spreading misinformation. Pretty much every comment on news websites today (even reputable ones) is AI generated nonsense.